If you’ve ever trained a machine learning model from scratch, you know the pain: enormous datasets, hours (or days) of computation, and the constant uncertainty of whether the model will even perform well in the end.

So what if instead of starting from zero, you could borrow knowledge from a model that has already learned something useful?

That’s exactly what transfer learning techniques make possible — and it’s one of the biggest reasons deep learning has become accessible beyond big tech labs.

In this guide, we’ll break down what transfer learning is, why it matters, how it works, and how you can apply it confidently even if you’re not a machine learning expert.

We’ll also cover tools, pitfalls, real-world examples, and practical steps you can follow today.

What Are Transfer Learning Techniques? (A Beginner-Friendly Explanation)

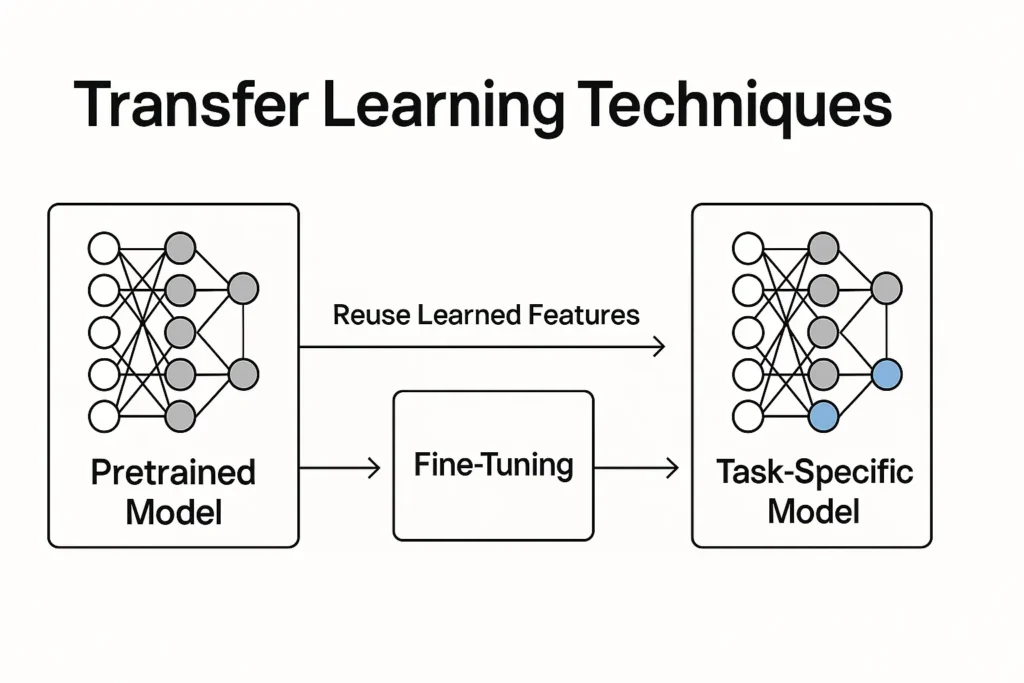

At its core, transfer learning is a method where you take a model trained on one task and adapt it to a different (but related) task.

Think of it like learning to play the guitar before learning the ukulele. You already know rhythm, chords, and hand coordination — you’re not starting from scratch. You “transfer” what you learned.

In machine learning, instead of training a deep neural network from a blank slate, you:

- Start with a pretrained model (trained on a huge dataset, often requiring millions of dollars of compute).

- Reuse its learned representations — patterns, features, or embeddings.

- Fine-tune or adapt it to a specific problem you care about.

This dramatically reduces the data, time, and resources you need.

Examples of pretrained models you’ve likely heard of:

- ImageNet-trained CNNs (e.g., ResNet, VGG)

- Large Language Models (LLMs) (e.g., BERT, GPT)

- Audio models like Wav2Vec

- Multimodal models like CLIP

These models already “understand” the world in some way. Transfer learning lets you leverage that understanding.

Why Transfer Learning Techniques Matter

Transfer learning is popular for one very practical reason: it works unbelievably well with minimal effort.

Here’s why thousands of teams rely on it:

1. You Need Far Less Data

Traditional deep learning might require hundreds of thousands of labeled images. With transfer learning?

Sometimes 500 or even 50 samples are enough.

2. Faster Training Times

Models that would take days to train from scratch can be fine-tuned in minutes or hours.

3. Significantly Better Accuracy

Pretrained models capture patterns that you simply can’t replicate with small datasets.

This often leads to higher accuracy, even on niche tasks.

4. Works Across Multiple Domains

Transfer learning shines in:

- Computer vision

- NLP

- Speech recognition

- Recommender systems

- Medical classification

- Fraud detection

If you’re working in AI, there’s a 90% chance transfer learning can make your job easier.

How Transfer Learning Techniques Work (Step-by-Step)

Let’s break down the process in a simple, human way.

Step 1: Choose a Suitable Pretrained Model

This depends on your domain:

- Image tasks → ResNet, EfficientNet, MobileNet

- Text tasks → BERT, RoBERTa, GPT, T5

- Audio tasks → Whisper, Wav2Vec

- Multimodal tasks → CLIP, Flamingo

Choosing the right foundation model is half the battle.

Step 2: Freeze or Modify Layers

There are three common strategies:

A. Feature Extraction

- Most pretrained layers remain frozen.

- Only the last few layers are retrained.

- Works best when your dataset is small.

B. Fine-Tuning

- Unfreeze more layers (or all of them).

- Useful when your data is moderately different from the original training domain.

C. Transfer + Custom Head

- Replace the final classification/regression layer with your own.

- Common in image classification and NLP.

Step 3: Train on Your Dataset

Training typically involves:

- Lower learning rates

- Early stopping

- Regularization

- Data augmentation (especially in vision tasks)

Because the model already has strong “prior knowledge,” the training converges quickly.

Step 4: Evaluate and Iterate

Check metrics such as:

- Accuracy / F1 score

- Precision and recall

- Confusion matrices

- ROC-AUC for classification

Fine-tune as needed.

Benefits & Real-World Use Cases of Transfer Learning

Transfer learning techniques are behind many AI breakthroughs — even ones you interact with daily.

1. Healthcare Imaging (Life-Saving Accuracy)

Radiologists use transfer learning to detect:

- Tumors

- Pneumonia

- Heart abnormalities

Because ImageNet models already understand edges, textures, and shapes, they adapt extremely well to medical imaging — even with small datasets.

2. Natural Language Processing (Powering Chatbots & Search)

Companies don’t train LLMs from scratch. They:

- Start with BERT/GPT

- Fine-tune on domain-specific text (finance, medicine, customer support)

This enables extremely accurate:

- Sentiment analysis

- Named entity recognition

- Chatbots

- Document summarization

3. E-commerce Recommendations

Deep models pretrained on general browsing behavior can be fine-tuned to predict:

- Purchases

- Interests

- Churn

- Cross-sell opportunities

4. Autonomous Vehicles

Transfer learning speeds up:

- Object detection

- Lane detection

- Pedestrian recognition

5. Fraud and Anomaly Detection

Pretrained sequence models help banks detect unusual transaction patterns with very little additional training.

In short: any industry that wants AI without a million-dollar compute budget uses transfer learning.

Types of Transfer Learning Techniques

Transfer learning isn’t one technique — it’s an ecosystem. Here are the core types:

1. Inductive Transfer Learning

- Target task is different from the source task.

- Most common type.

- Example: Pretrained ImageNet model → Plant disease classifier.

2. Transductive Transfer Learning

- Tasks are the same, but domains differ.

- Example: Classifying emails, but source data is English and target data is French.

3. Unsupervised Transfer Learning

- Pretrained model learns general representations (embeddings).

- Adapted for new tasks without labels.

- Example: Using autoencoders to learn features → clustering customers.

4. Multitask Transfer Learning

- Learn several tasks at once.

- Improves generalization.

- Used heavily in NLP and multimodal AI.

5. Domain Adaptation

- Focuses on adjusting a model so it performs well in a new domain with different distributions.

- Example: From synthetic driving data → real-world driving videos.

A Practical Step-by-Step Guide to Applying Transfer Learning

Here’s a clear blueprint you can follow:

Step 1: Define Your Task

Is it:

- Classification?

- Regression?

- Clustering?

- Sequence labeling?

This helps determine the right model architecture.

Step 2: Gather & Prepare Your Dataset

Transfer learning works best when:

- Data is cleaned

- Labels are consistent

- You have at least a small representative sample

Step 3: Choose Your Pretrained Model

Use model hubs like:

- Hugging Face

- TensorFlow Hub

- PyTorch Hub

Look for models with:

- High benchmark scores

- Good documentation

- Active community support

Step 4: Decide on Transfer Strategy

Ask yourself:

- “Is my new task similar to the original one?”

- “Do I have a small dataset?”

This determines whether you should:

- Freeze layers

- Fine-tune

- Replace the final head

Step 5: Train Carefully

Use:

- Low learning rates (1e-4 to 1e-6)

- Early stopping

- Dropout

- Gradient clipping

Step 6: Evaluate Honestly

Use multiple metrics, not just accuracy.

Step 7: Deploy & Monitor

Models can drift over time. Plan to:

- Retrain periodically

- Collect feedback data

- Test edge cases

Tools, Frameworks & Platform Recommendations

You don’t need to build everything manually. Plenty of tools simplify transfer learning.

Best Tools for Transfer Learning

1. TensorFlow/Keras

Great for beginners and production.

Pros:

- Simple API

- Many pretrained models

- Excellent documentation

Cons:

- Can be slower in research environments

2. PyTorch

Most popular in research and industry.

Pros:

- Flexible

- Hugging Face integration

- Strong community

Cons:

- Slightly steeper learning curve

3. Hugging Face Transformers

The gold standard for NLP and multimodal models.

Pros:

- Thousands of pretrained models

- Easy fine-tuning

- Incredibly active ecosystem

Cons:

- Models can be large

4. Fast.ai

Great for fast prototyping.

Pros:

- Very beginner-friendly

- High-level abstractions

Cons:

- Less control compared to PyTorch

Common Mistakes People Make With Transfer Learning (And How to Fix Them)

Mistake 1: Using the Wrong Pretrained Model

Fix:

Choose a source model as close as possible to your domain.

Mistake 2: Unfreezing All Layers Too Soon

Fix:

Start with frozen layers → unfreeze gradually.

Mistake 3: Using a High Learning Rate

Fix:

Fine-tuning requires learning rates 10–100x smaller than training from scratch.

Mistake 4: Not Matching Input Formats

Fix:

Check:

- Tokenization

- Image preprocessing

- Sampling rates

Mistake 5: Overfitting on Small Datasets

Fixes:

- Data augmentation

- Early stopping

- Regularization

- Smaller model head

Mistake 6: Forgetting Domain Shift Issues

Fix:

Use domain adaptation techniques such as:

- Adding noise

- Using synthetic data

- Normalization adjustments

Conclusion

Transfer learning techniques have revolutionized the way we build machine learning systems.

Instead of starting from zero, you stand on the shoulders of giant pretrained models that already understand images, language, audio, or multimodal information.

Whether you’re building a niche classifier, a chatbot, a fraud detection system, or a recommendation engine, transfer learning allows you to:

- Train faster

- Use less data

- Achieve better accuracy

- Spend less money

If you’re working in AI — or planning to — mastering transfer learning is one of the most valuable skills you can add to your toolkit.

If you have questions or want hands-on examples, drop a comment or explore more AI tutorials!

FAQs

What is transfer learning in simple terms?

Transfer learning means taking a model trained on one task and reusing its knowledge for a different task. It saves time, data, and compute.

When should I use transfer learning?

Use it when your dataset is small, your compute is limited, or your task is related to a well-studied domain like vision or NLP.

Do I always need to fine-tune the whole model?

No. Often you only need to train the final layers. Full fine-tuning is needed only when your new dataset differs significantly from the original.

Is transfer learning only for deep learning?

It’s most common in deep learning, but the concept exists in classical ML too (e.g., domain adaptation).

What is feature extraction vs fine-tuning?

Feature extraction freezes most layers; fine-tuning updates many or all layers. Feature extraction is faster; fine-tuning provides higher accuracy

Adrian Cole is a technology researcher and AI content specialist with more than seven years of experience studying automation, machine learning models, and digital innovation. He has worked with multiple tech startups as a consultant, helping them adopt smarter tools and build data-driven systems. Adrian writes simple, clear, and practical explanations of complex tech topics so readers can easily understand the future of AI.